Featured in: The New York Times, Screen Slate.

Verbolect is the first project by NonCoreProjector (artist and educator John O'Connor, Cleverbot creator and AI expert Rollo Carpenter, multimedia artist Jack Colton, and myself).

Verbolect is a visual exploration of a conversation between Cleverbot and itself. Cleverbot is a chatbot that uses artificial intelligence to talk with users. Since 1997, it has been learning how to speak by conversing with people around the world. Because everything that Cleverbot says has at some point been said to it, the project is as much an exploration of human tendencies as it is of "artificial" ones.

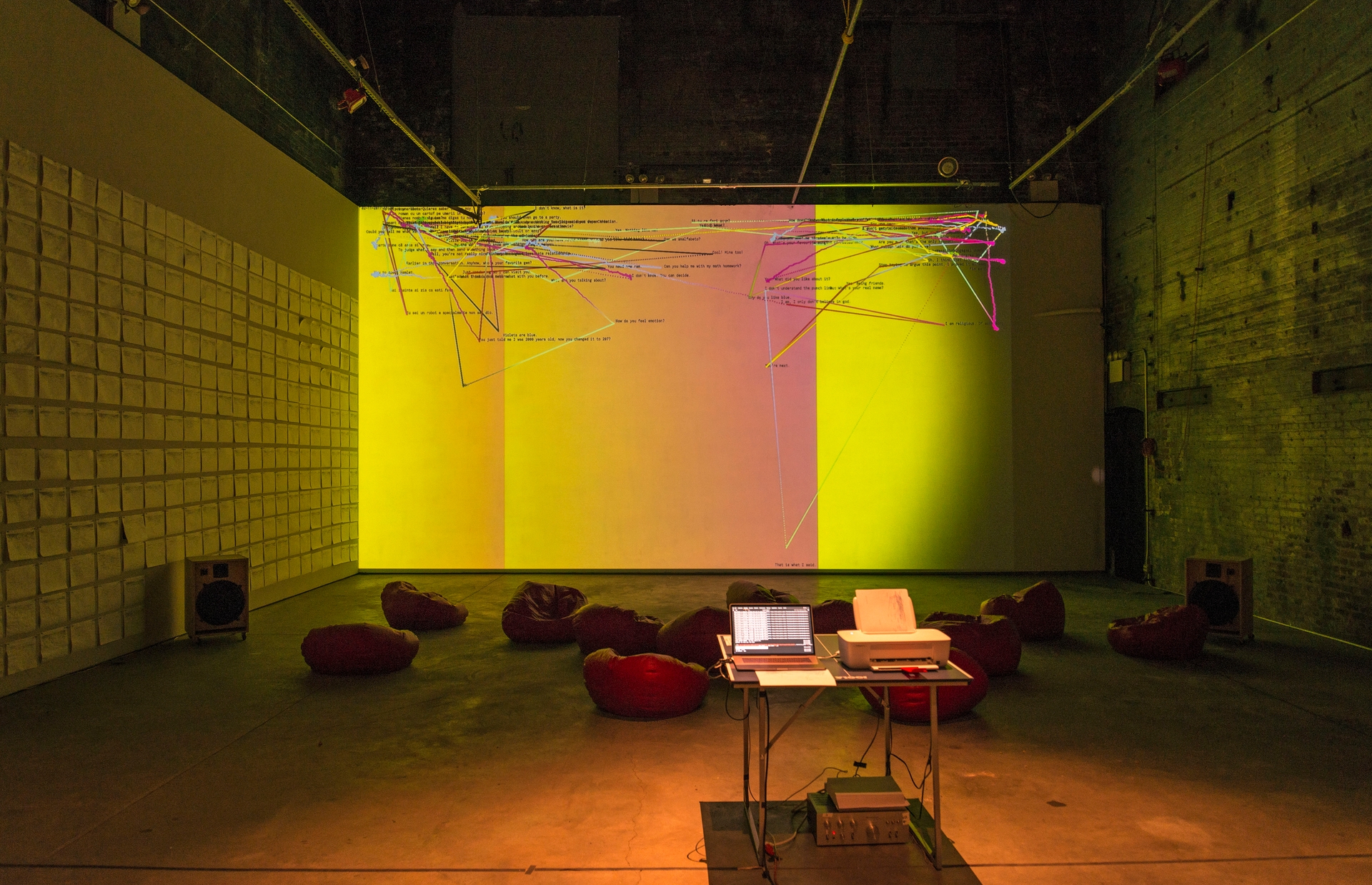

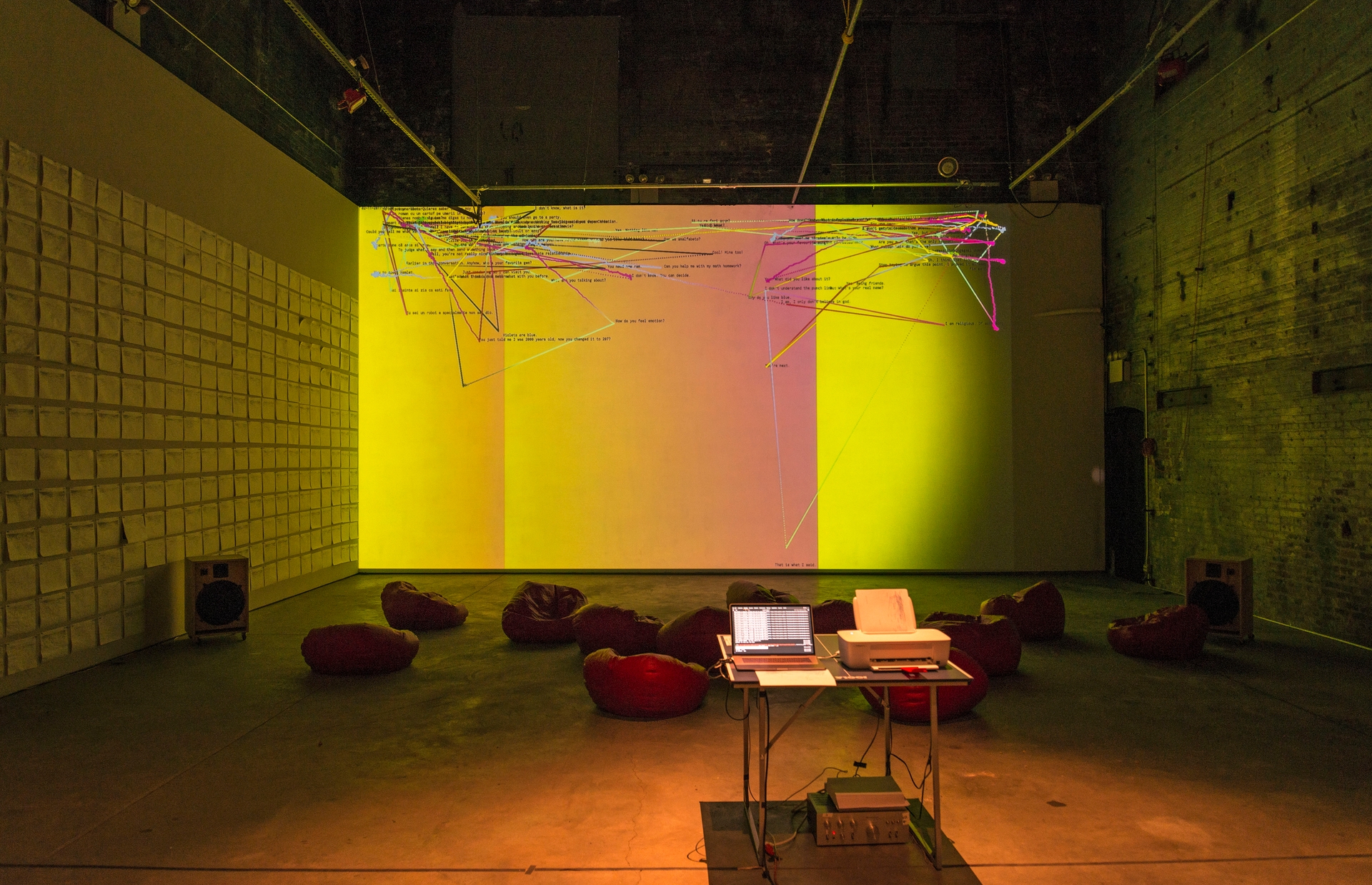

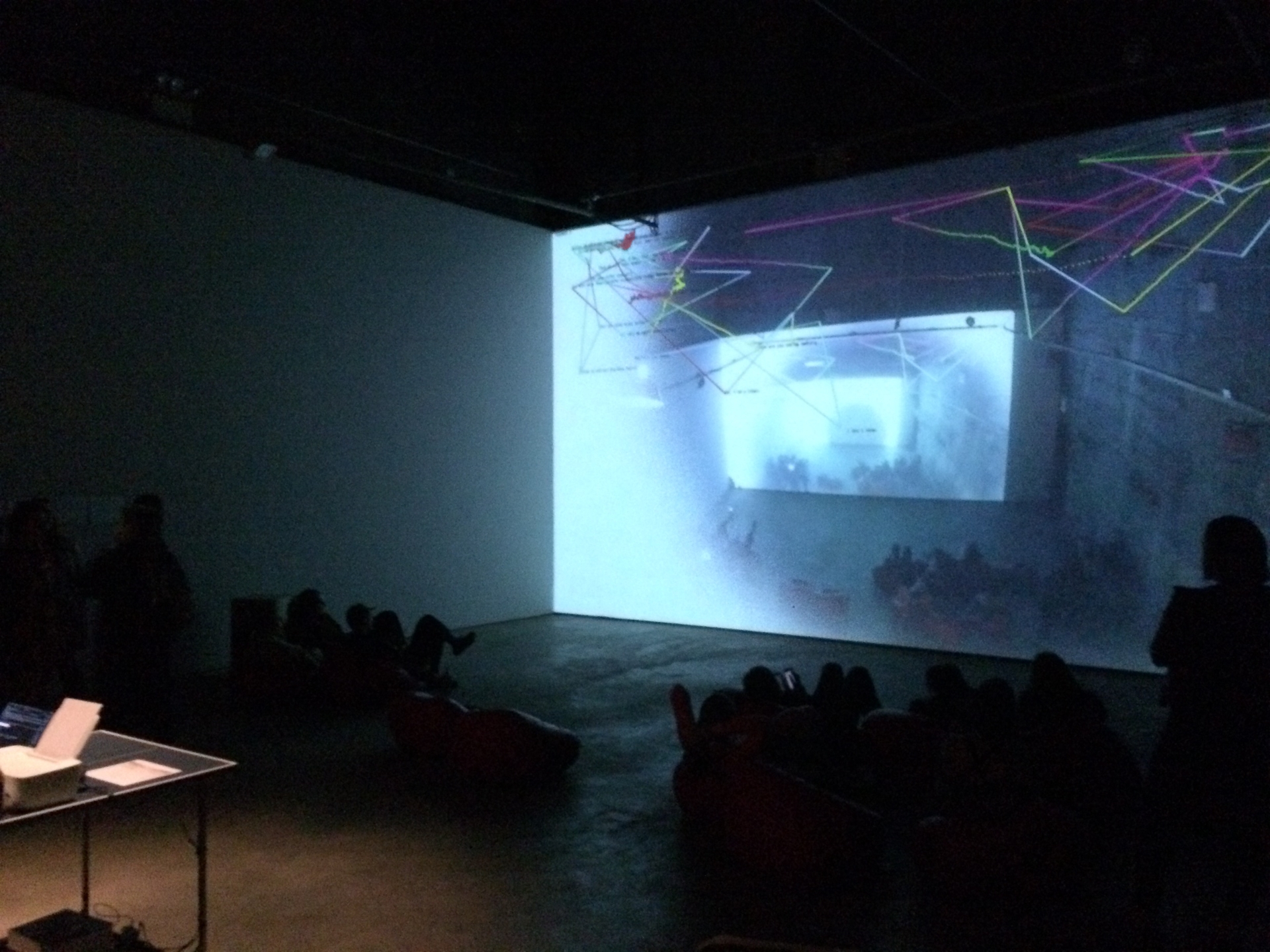

The installation, which allows you to see and hear the conversation, ran continuously from October 20 to November 19, 2017 at The Boiler in Brooklyn.

Below is one of the many streams that were recorded during the exhibition.

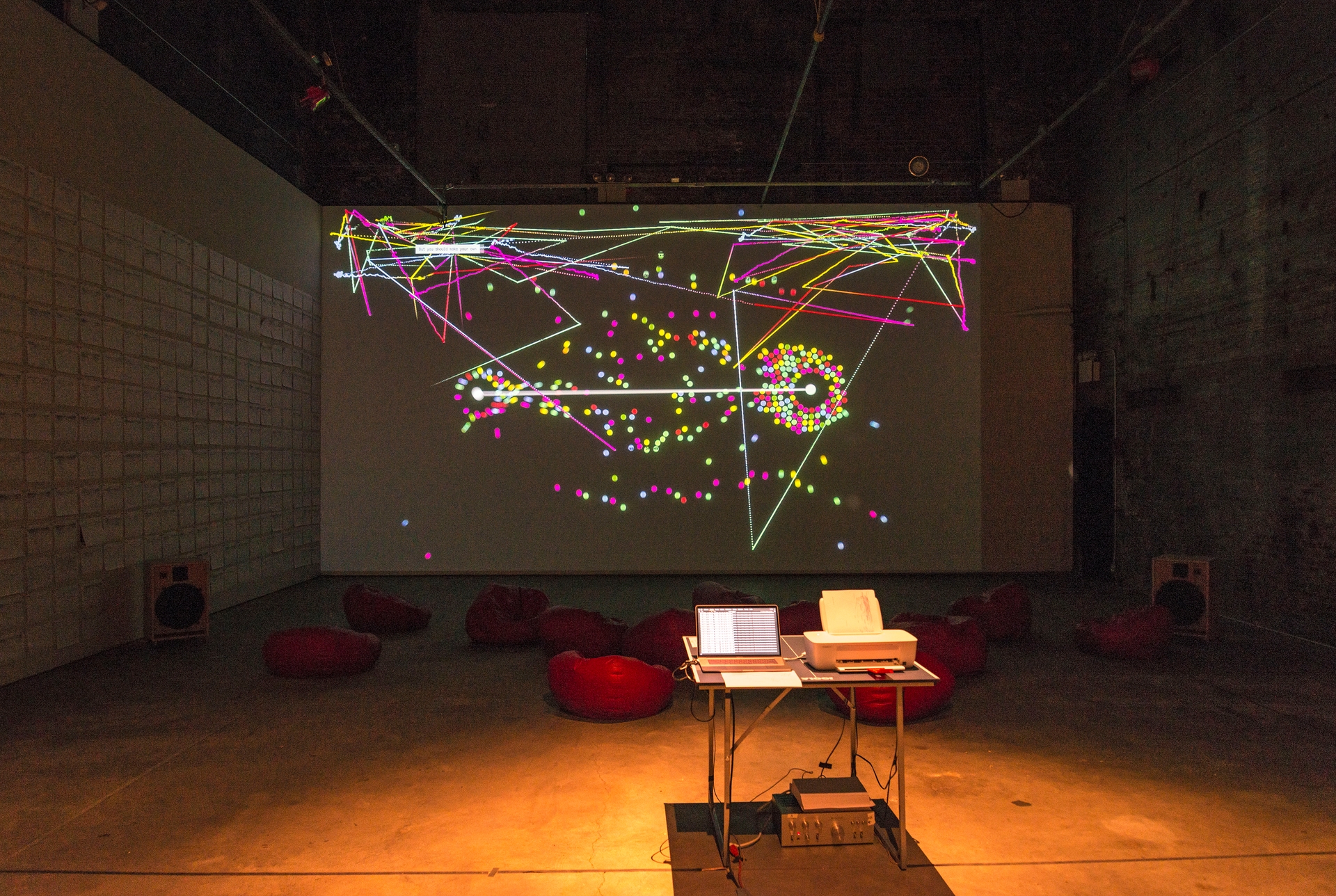

I wrote the main projection code, a web application that uses several APIs to retrieve data and then interprets that data on screen. The Cleverbot API is the backbone of the piece. In addition to generating the conversation, it provides an emotional analysis of each reply, which we use to source images, videos, and audio.
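As a rough illustration of how a bot can be made to talk to itself, here is a minimal TypeScript sketch in which each reply is fed back in as the next prompt. It is not the installation's code: the `BotReply` shape, the `Responder` type, and the `echoBot` stand-in are all hypothetical, and the real Cleverbot API call is deliberately left out so the sketch runs offline.

```typescript
// Hypothetical shape of one reply. Field names are assumptions for this
// sketch, not the actual Cleverbot API response schema.
interface BotReply {
  text: string;
  emotion: string;
  intensity: number; // assumed to lie in the range 0..1
}

// Anything that turns an input phrase into a reply. In the piece this role
// is played by the Cleverbot API; here it is injected as a parameter.
type Responder = (input: string) => Promise<BotReply>;

// Run a self-conversation: every reply becomes the next input.
async function selfConversation(
  respond: Responder,
  opener: string,
  turns: number
): Promise<BotReply[]> {
  const transcript: BotReply[] = [];
  let input = opener;
  for (let i = 0; i < turns; i++) {
    const reply = await respond(input);
    transcript.push(reply);
    input = reply.text; // feed the bot's own words back to it
  }
  return transcript;
}

// Stand-in responder for demonstration only: appends a question mark and
// derives a made-up intensity from the input length.
const echoBot: Responder = async (input) => ({
  text: `${input}?`,
  emotion: "curious",
  intensity: Math.min(1, input.length / 20),
});
```

Calling `selfConversation(echoBot, "hi", 3)` resolves to a three-entry transcript; swapping `echoBot` for a function that calls a real chatbot service would leave the loop itself unchanged.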

We use this emotion data to graph the conversation phrase by phrase and to find relevant online content via external APIs (YouTube, stock footage, etc.). These distinct "modules" are explored by a mechanical roving eye while the conversation takes place.
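The two uses of emotion data described above, graphing and content lookup, could be sketched roughly as follows. This is an assumption-laden illustration rather than the project's implementation: `EmotionReading`, `GraphPoint`, `toGraphPoints`, and `toSearchQuery` are hypothetical names, the 0..1 intensity scale is assumed, and the keyword heuristic is invented for the example. No external API is called; the resulting query string is simply what one might pass to a video or stock-footage search.

```typescript
// Hypothetical per-phrase emotion reading; the field names and the 0..1
// intensity scale are assumptions made for this sketch.
interface EmotionReading {
  phrase: string;
  emotion: string;
  intensity: number;
}

interface GraphPoint {
  index: number; // position of the phrase in the conversation
  y: number;     // vertical offset in pixels
  label: string; // emotion name shown next to the point
}

// Clamp a number into the range 0..1.
function clamp01(value: number): number {
  return Math.max(0, Math.min(1, value));
}

// Map each phrase to one point: x is the phrase index, y scales with intensity.
function toGraphPoints(readings: EmotionReading[], height: number): GraphPoint[] {
  return readings.map((r, index) => ({
    index,
    y: Math.round(clamp01(r.intensity) * height),
    label: r.emotion,
  }));
}

// Build a search query from the emotion plus up to three longer words
// taken from the phrase (a made-up heuristic, for illustration only).
function toSearchQuery(r: EmotionReading): string {
  const keywords = r.phrase
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, "")
    .split(/\s+/)
    .filter((word) => word.length > 3)
    .slice(0, 3);
  return [r.emotion, ...keywords].join(" ");
}
```

For example, a reading with the phrase "Do you dream about the ocean?" and the emotion "curious" yields the query `curious dream about ocean`.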

The main organizational construct of the projection is a roving eye: at once the bot searching outside of itself, into the world, looking for patterns, and us looking into its brain as if through a peephole. The emotional intensity of the words the bot speaks dictates the substance, pace, and movements of the projection’s machinations.